The Enterprise AI Challenge

Enterprises face escalating API token costs, vendor lock-in risks, and unpredictable model performance in the rapidly evolving GenAI landscape. ELLY removes these barriers through intelligent, dynamic routing and precise real-time model evaluation, directly translating complexity into business value.

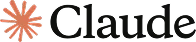

Where ELLY Fits in Your Business Stack

ELLY sits between your business applications and Large Language Models (LLMs), acting as an LLM orchestration layer. Developers store their provider API keys in ELLY’s secure vault, configure application-specific profiles to balance quality and cost, and integrate with a single Alkitech API key, which centralizes LLM usage management within their stack.

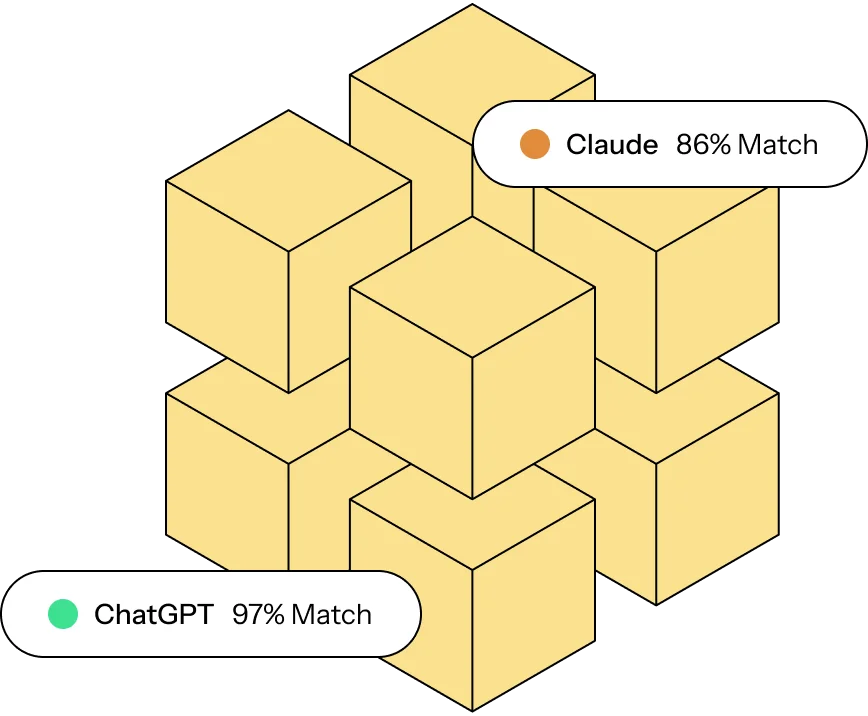

Intelligent Model Selection

Whether it’s ChatGPT, Claude, Gemini, or open-source LLMs, ELLY continuously evaluates and selects the best model for each use case. That means smarter performance, lower costs, and no more guesswork.

Cost-Smart Routing

ELLY routes simpler tasks to more affordable models, which can reduce typical enterprise AI costs by up to 80% and save customers thousands each month.
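
To make the idea concrete, cost-aware routing can be sketched as choosing the cheapest model whose quality score clears the bar a task needs. This is a minimal illustration, not ELLY's actual implementation: the model names, prices, quality scores, and the `pick_model` function are all hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    name: str                  # hypothetical model identifier
    cost_per_1k_tokens: float  # placeholder price, not a real quote
    quality: float             # placeholder quality score in [0, 1]


# Illustrative catalog; every value here is made up for the example.
CATALOG = [
    Model("small-model", 0.10, 0.60),
    Model("medium-model", 0.50, 0.80),
    Model("large-model", 2.00, 0.95),
]


def pick_model(required_quality: float, catalog=CATALOG) -> Model:
    """Return the cheapest model whose quality meets the requirement."""
    eligible = [m for m in catalog if m.quality >= required_quality]
    if not eligible:
        # Nothing clears the bar: fall back to the highest-quality model.
        return max(catalog, key=lambda m: m.quality)
    return min(eligible, key=lambda m: m.cost_per_1k_tokens)
```

With this placeholder catalog, a task that needs a quality of 0.5 goes to `small-model`, while one that needs 0.75 goes to `medium-model`. The savings in any real deployment depend on how many prompts can safely use the cheaper tiers.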

Real-Time Model Evaluation

ELLY continuously evaluates model reliability, accuracy, and performance metrics, ensuring optimal and cost-efficient model selection for each prompt.

Intelligent Prompt Analysis

Before routing, ELLY quickly scans each prompt to understand its intent and complexity, ensuring it’s matched with the most effective AI model.
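
One simple way to picture prompt analysis is a lightweight classifier that labels each prompt before a model is chosen. The sketch below uses crude keyword and length heuristics purely for illustration; the cue list, the threshold, and the `estimate_complexity` function are assumptions, and a production analyzer would more likely rely on a trained classifier.

```python
import re

# Hypothetical cue words that suggest multi-step reasoning.
REASONING_CUES = {"analyze", "compare", "prove", "derive", "debug", "explain"}

# Arbitrary length threshold (in words) chosen for the example.
LONG_PROMPT_WORDS = 150


def estimate_complexity(prompt: str) -> str:
    """Classify a prompt as 'simple' or 'complex' using crude heuristics."""
    words = re.findall(r"[a-z']+", prompt.lower())
    has_cue = any(word in REASONING_CUES for word in words)
    is_long = len(words) > LONG_PROMPT_WORDS
    return "complex" if (has_cue or is_long) else "simple"
```

A short factual question would be labeled `simple` here, while a request to compare and explain two documents would be labeled `complex` and could be sent to a stronger model.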

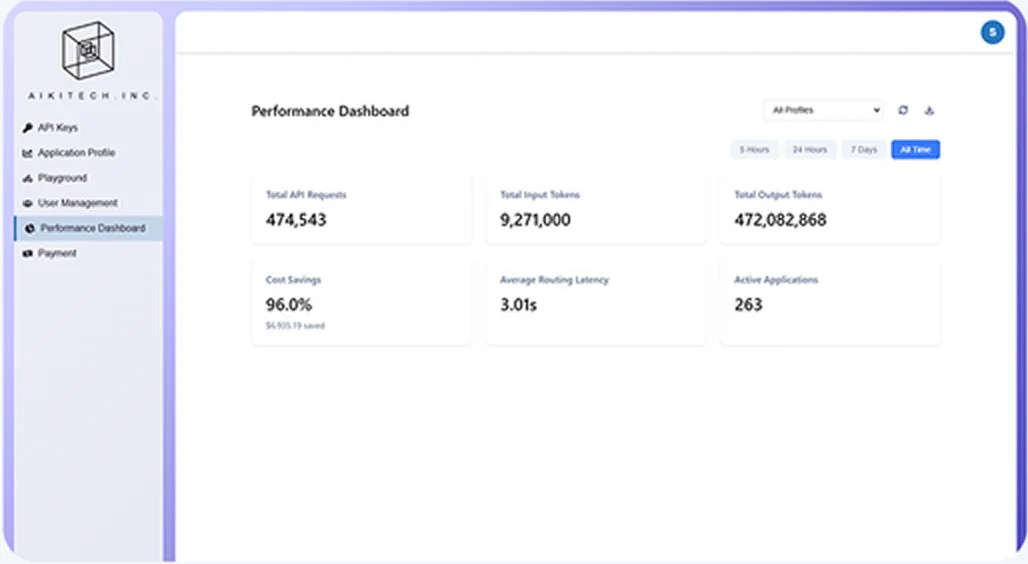

All-in-One Platform for AI Ops

ELLY combines secure API key management with a unified dashboard for tracking performance, controlling access, and streamlining every aspect of your LLM orchestration—all in one place.

Privacy-First Architecture

ELLY is designed with zero data retention. Prompts and outputs are never stored—keeping your enterprise data private, secure, and compliant.

Built-In Resilience & Scalability

ELLY is cloud-native and containerized, supporting high availability and elastic scaling. Built-in multi-vendor failover ensures continuity and prevents vendor lock-in.
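
Multi-vendor failover can be pictured as an ordered chain of providers, where a request moves to the next vendor when the current one fails. The following is a minimal sketch under that assumption; `complete_with_failover` and the two stand-in vendor functions are hypothetical and do not reflect ELLY's internals or any real vendor SDK.

```python
from typing import Callable, Sequence


class AllProvidersFailed(Exception):
    """Raised when every provider in the chain has failed."""


def complete_with_failover(
    prompt: str,
    providers: Sequence[tuple[str, Callable[[str], str]]],
) -> tuple[str, str]:
    """Try each (name, call) pair in order; return (name, response)."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # in practice, catch narrower error types
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


# Stand-in provider functions; real ones would call a vendor SDK or HTTP API.
def flaky_vendor(prompt: str) -> str:
    raise TimeoutError("simulated outage")


def backup_vendor(prompt: str) -> str:
    return f"echo: {prompt}"
```

Calling `complete_with_failover("hi", [("flaky", flaky_vendor), ("backup", backup_vendor)])` skips the simulated outage and returns the backup vendor's response. Because the chain is just an ordered list, swapping or adding vendors does not require changes to the calling application.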

Enterprise-Grade RBAC

Granular role-based access control (RBAC) lets you manage user permissions at scale—ensuring compliance and operational control across distributed teams.
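
At its core, RBAC maps roles to sets of permissions and checks a user's roles before an action is allowed. The sketch below shows that pattern in its simplest form; the role names, permission names, and the `is_allowed` function are hypothetical and chosen only for illustration.

```python
# Hypothetical roles and permissions, chosen only for illustration.
ROLE_PERMISSIONS = {
    "admin": {"manage_keys", "edit_profiles", "view_dashboard"},
    "developer": {"edit_profiles", "view_dashboard"},
    "viewer": {"view_dashboard"},
}


def is_allowed(roles, permission: str) -> bool:
    """Return True if any of the user's roles grants the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)
```

In this example a user with only the `viewer` role can open the dashboard but cannot manage API keys, and an unrecognized role grants nothing.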

Adaptive Hallucination Filtering

ELLY continuously evaluates models against benchmarks, routing prompts to models with the lowest hallucination rates—improving accuracy and reliability in outputs.

Enterprise AI That Solves Real Problems

From customer support and data analysis to specialized scenarios such as municipal threat detection, ELLY powers smarter AI across diverse enterprise applications.

Explore Use Cases

Coding

Data Analysis

Customer Support

Ticketing Support

Summarization

Tailored Solutions

Scale Faster with Smarter AI Orchestration

Let’s put your GenAI operations on autopilot. Whether you’re looking to cut costs, improve accuracy, or simplify management, ELLY can help you get there—fast.